Site navigation seems like something you shouldn’t have to think about, but its importance to search engine optimization (SEO) is often underestimated.

Your main goal is to present certain information to your customers, so it’s easy to get lost in thinking that it doesn’t matter how you structure that information.

However, your site navigation plays several roles in providing data to search engine crawlers and ensuring usability, which makes it a crucial element of your SEO strategy.

The term “site navigation” can actually refer to multiple components of a site.

First, it usually refers to the main navigation bar on a given website, often found running across the top of the screen.

It can also refer to the overall sitemap of the domain, including links not found in that header bar.

Confusing things even further, “site navigation” can even refer to how easily users can travel throughout your site and find the information they are seeking.

To simplify things, in this article we’ll refer to site navigation as the overall structure and navigability of your website.


The Importance of Sitemaps and Crawlers

To understand the mechanics that dictate why site navigation links are important, we first have to understand crawlers.

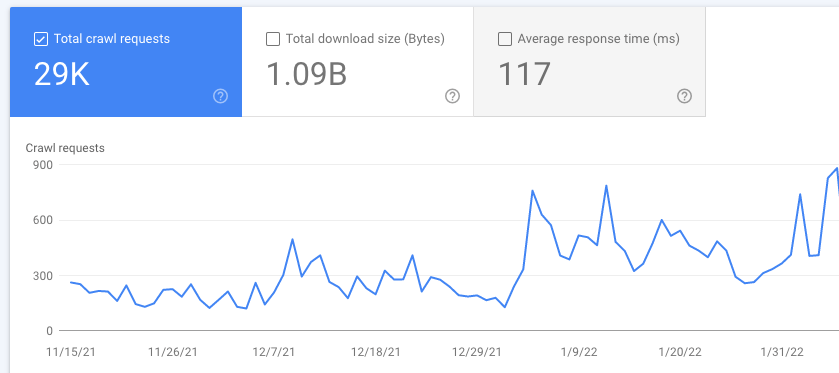

Google Search Console (GSC) can provide crawl stats on your site, letting you know areas that could use improvement.

If you’ve been working in SEO for more than a month, you’re probably at least fleetingly aware that crawlers are automated programs that scour the web for pages to index.

Web crawlers, also called spiders, are bots that systematically scour the internet for content and download copies of web pages to add to a search engine’s index.

How do search engine crawlers know where to find web pages? They start with a list of seed URLs, which can come from a variety of sources, including sitemaps. As each web page is crawled, the bot identifies all the hyperlinks on that page and adds them to the queue.
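To make the queue idea concrete, here is a minimal Python sketch of that loop. It is an illustration only, not how any real search engine is implemented: the three pages, the `example.com` URLs, and the `fetch` callable are all made up for the example, and the pages live in memory rather than being downloaded.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_urls, fetch):
    """Visit pages breadth-first, starting from the seed URLs.

    `fetch` is a hypothetical callable that returns a page's HTML,
    or None if the page cannot be retrieved.
    """
    queue = deque(seed_urls)
    seen = set(seed_urls)
    visited = []
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


# A made-up three-page site, stored in memory instead of fetched over HTTP.
PAGES = {
    "https://example.com/": '<a href="/products/">Products</a>',
    "https://example.com/products/": (
        '<a href="/products/tables/">Tables</a> <a href="/">Home</a>'
    ),
    "https://example.com/products/tables/": "<p>No links here.</p>",
}

crawl_order = crawl(["https://example.com/"], PAGES.get)
```

Notice that the Tables page is only discovered because the Products page links to it. A page that nothing links to, and that no sitemap lists, never enters the queue.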

Google, in particular, has several invisible crawlers constantly discovering new information on the Internet.

Since crawlers consume resources on the systems they visit, some people block or limit their access. For example, people who don’t want their site crawled add rules to their robots.txt file to tell bots which parts of the site they can and can’t crawl. Sometimes they block entire directories.

However, blocking crawlers can prevent portions of your site from being indexed in Google.
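If you want to check what a set of robots.txt rules actually blocks, Python’s standard library includes a parser for the format. The sketch below uses a made-up rule set and made-up `example.com` URLs; it only shows how a well-behaved bot would interpret those rules, not what any particular search engine will do.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt that blocks one directory for every bot.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask whether a given bot may fetch a given URL under these rules.
can_fetch_home = parser.can_fetch("Googlebot", "https://example.com/")
can_fetch_private = parser.can_fetch(
    "Googlebot", "https://example.com/private/report.html"
)
```

Here the home page is allowed and everything under `/private/` is not, which is exactly the kind of rule that can quietly keep a whole section of a site out of search results.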

In order to generate the most relevant search results, search engines need to have a vast store of accurate, up-to-date information about the pages on the web.

Crawlers ensure the legitimacy of this data, so if you stop crawlers from being able to do their job, you run the risk of having your pages left out of this massive store of information.

On the other hand, if you can help crawlers do their job, you’ll maximize the number of pages they’re able to see on your site, and will thus maximize your presence in search engine indexes.

To maximize crawler efficiency, first make sure your navigation and content don’t depend on Flash or on JavaScript alone.

Flash is an obsolete format that browsers no longer support, and although Google can render JavaScript, links and content that only appear after scripts run are harder for crawlers to process and may be discovered late or missed.

It’s better to expose your navigation as standard HTML links, styled with CSS.

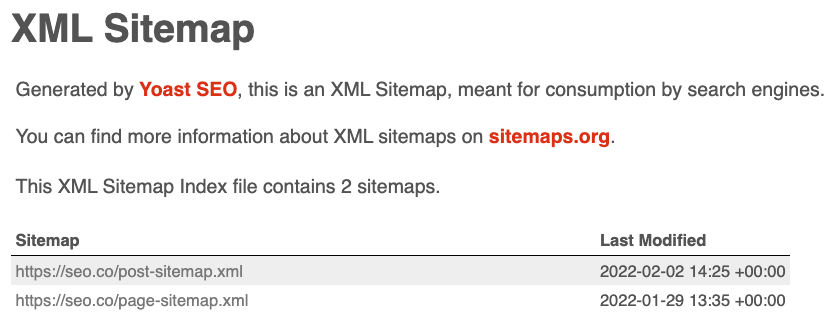

Building an XML sitemap is a must if you want crawlers to read your site in full.

There are many free tools available that can help you build an XML sitemap, such as XML-Sitemaps.com, but it’s better if you put it in the hands of an experienced web developer.

Once complete, you can submit your sitemap in Google Search Console (GSC) and place the file in your site’s root directory.

Having a properly formatted XML sitemap, in addition to maintaining a crawlable site, makes it far more likely that your pages are fully indexed.
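For a sense of what such a file contains, here is a minimal Python sketch that writes a sitemap in the standard sitemaps.org format. The function name and the URLs are placeholders for illustration; a real site would normally generate this list from its CMS or a sitemap plugin rather than by hand.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a sitemap XML document (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder URLs; replace with your own absolute URLs.
sitemap_xml = build_sitemap(
    [
        "https://www.mywebsite.com/",
        "https://www.mywebsite.com/product.html",
    ]
)
```

The result is one `<url>` entry per page, each with a `<loc>` element holding the page’s full address. The protocol also allows optional fields such as `<lastmod>`, which this sketch leaves out.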

Site Depth

The depth of your site is also an important factor for navigation.

If you’re engaging in a content marketing strategy, “depth” might seem like a good thing.

After all, the deeper your content goes, the more likely it is that you will be seen as an expert and that you’ll attract a wider audience as a result.

However, “depth,” as it applies to websites, is actually a bad thing.

The depth of a website refers to how many links a visitor or crawler has to follow to reach a given page.

For example, let’s say your website has 50 pages.

The home page and nine other pages are immediately available on the top header.

However, in order to access the remaining 40 pages, you need to click into one of those initial 10.

The name of the game is K.I.S.S. (keep it simple, stupid). Stick to “meat and potatoes” content. That’s why we only have two sitemaps, giving the crawlers fewer steps to get to the entirety of our site’s content.

Some of those pages actually require a specific clicking order (such as Home > Products > Tables > Wooden Tables) in order to be reached.

This is considered a “deep” site.

Shallow sites, on the other hand, offer multiple pathways to each page.

Instead of mandating a directional flow like the example above, a shallow site would have many pages pointing to each page in the hierarchy (Products, Wooden Tables, etc.).

Shallow sites and a simple sitemap make it easier for users to find what they are looking for, and as a result, shallow sites tend to get a small boost in authority.

Restructuring your site to avoid unnecessary depth can give you more authority and more ranking power.
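One rough way to measure depth is to count the minimum number of clicks needed to reach each page from the home page. The Python sketch below does that with a breadth-first search over a link map. Both link maps are invented for illustration, reusing the page names from the example above; in practice you would build the map from a crawl of your own site.

```python
from collections import deque


def click_depths(links, home):
    """Return the minimum number of clicks from `home` to each reachable page.

    `links` maps a page name to the list of pages it links to.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Deep structure: each page is reachable only through its parent.
DEEP_SITE = {
    "Home": ["Products"],
    "Products": ["Tables"],
    "Tables": ["Wooden Tables"],
}

# Shallower structure: several pages point to each page in the hierarchy.
SHALLOW_SITE = {
    "Home": ["Products", "Tables"],
    "Products": ["Tables", "Wooden Tables"],
    "Tables": ["Wooden Tables"],
}

deep = click_depths(DEEP_SITE, "Home")
shallow = click_depths(SHALLOW_SITE, "Home")
```

In the deep version, Wooden Tables sits three clicks from the home page. In the shallower version it is two clicks away, and there is more than one path to it.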

You might be wondering if you should just link more pages in your main menu. Although this would certainly make your content easily accessible to crawlers, it can create a frustrating user experience. Your main menu should only give visitors the most important links. Having too many choices and multiple submenus can make people bounce.

Create a text sitemap for your visitors

In addition to an XML sitemap for search engine crawlers, like Googlebot, it’s crucial to have an HTML text-based sitemap for your visitors. Having a strong search function is great, but sometimes people just want a list of categorized content with direct links.

Visitor sitemaps help people find what they’re looking for when they can’t find it in your main menu or through your search function. Think of it as a table of contents for your entire website, much like the TOC in a book.

The larger your website, the more important it is to have a text sitemap for your visitors. Even the most well designed and highly organized websites need a backup directory for visitors who can’t find something.

Text sitemaps for visitors also help search crawlers discover your web pages. Although crawlers will sift through links in your XML sitemap, crawlers prefer discovering content through HTML. If they skip something in your XML sitemap, they’ll grab it through the text version.
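A visitor sitemap can be as simple as a page of headings and link lists. As a rough sketch, the Python below turns a categorized list of pages into that kind of HTML. The categories, titles, and paths are all placeholders, and the function name is made up for this example; most CMS platforms have plugins that do the same job.

```python
from html import escape


def render_html_sitemap(sections):
    """Render categorized links as headings with bulleted lists.

    `sections` maps a category name to a list of (title, url) pairs.
    """
    parts = ["<h1>Sitemap</h1>"]
    for category, pages in sections.items():
        parts.append(f"<h2>{escape(category)}</h2>")
        parts.append("<ul>")
        for title, url in pages:
            # escape() also escapes quotes, so the URL is safe in an attribute.
            parts.append(f'  <li><a href="{escape(url)}">{escape(title)}</a></li>')
        parts.append("</ul>")
    return "\n".join(parts)


# Placeholder content for illustration.
SECTIONS = {
    "Products": [
        ("Tables", "/products/tables/"),
        ("Wooden Tables", "/products/tables/wooden/"),
    ],
    "Company": [("About", "/about/")],
}

html_sitemap = render_html_sitemap(SECTIONS)
```

Because every entry is a plain `<a href>` link, the same page works for visitors scanning the list and for crawlers following links.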

Sitemap best practices (according to Google)

- Stick to Google’s size limits. For most sites, you won’t need to worry about size. If your site contains more than 50,000 URLs, however, or your sitemap file would exceed 50MB uncompressed, you’ll need to create multiple sitemaps and submit each one. Alternatively, you can create a sitemap index file and submit just that index.

- Sitemap location matters. First, make sure you submit all of your sitemaps to Google. If you don’t submit a sitemap through Search Console, it will only apply to files and folders descending from the directory that contains it. This is why it’s a good idea to always place your sitemap in your root directory.

- Use absolute URLs. Throughout your website, you probably use relative URLs, like “/product.html”. For your sitemap, all URLs should be full and absolute. For example: “https://www.mywebsite.com/product.html”.

For a full breakdown on sitemap best practices, Google has created detailed documentation that explains everything as well as how to submit your sitemaps.
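Two of the rules above, the 50,000-URL limit and the absolute URL requirement, are easy to apply in code. Here is a small Python sketch, assuming you already have a list of relative paths for your site. The helper names and the generated paths are hypothetical; the domain is the placeholder used above.

```python
from urllib.parse import urljoin

# Maximum number of URLs allowed in a single sitemap file.
MAX_URLS_PER_SITEMAP = 50_000


def to_absolute(base_url, paths):
    """Turn relative paths like "/product.html" into full, absolute URLs."""
    return [urljoin(base_url, path) for path in paths]


def split_for_sitemaps(urls, limit=MAX_URLS_PER_SITEMAP):
    """Split a long URL list into chunks that each fit in one sitemap."""
    return [urls[i:i + limit] for i in range(0, len(urls), limit)]


# 120,000 made-up product pages, to show the split.
paths = [f"/product-{n}.html" for n in range(120_000)]
absolute_urls = to_absolute("https://www.mywebsite.com/", paths)
chunks = split_for_sitemaps(absolute_urls)
```

With 120,000 URLs this produces three chunks, so you would publish three sitemap files and either submit each one or list them in a single sitemap index.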

Structuring Your URLs

The structure of each individual URL in your website is also important for SEO.

While most modern SEO strategies steer away from optimizing for specific keywords in the body content of web pages, including relevant keywords in your URLs is a sound strategy that won’t run the risk of being flagged for keyword stuffing. Make sure your pages are specifically described in your URLs (such as “marketing services” instead of just “services”) to maximize your searchability, especially for small businesses.

As a rule of thumb, the shorter, more descriptive, and more accurate your URL slug is, the better.

For example, on our own site, we went from a URL structure like this:

to one that looked like this:

https://seo.co/content-marketing/roi/

The SEO-friendly way of completing this involved:

- Changing the permalink URL slug

- Creating 301 redirects from the old slug to the new slug, ensuring no redirect chains existed

- Updating all internal links with a “find and replace” search in the database so that no internal links on our own site pointed to a redirected URL

- Updating external links pointing to that post (where possible)
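The “no redirect chains” step is worth checking programmatically if you have more than a handful of redirects. The Python sketch below takes a map of old paths to new paths and points every old path straight at its final destination. The old slugs are invented for illustration (only `/content-marketing/roi/` comes from the example above), and how you load and save the map depends on your server or redirect plugin.

```python
def flatten_redirects(redirects):
    """Point every old path directly at its final destination.

    `redirects` maps an old path to the path it redirects to.
    Raises ValueError if the redirects form a loop.
    """
    flattened = {}
    for source in redirects:
        target = redirects[source]
        seen = {source}
        # Keep following the chain until the target is not itself redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"Redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


# Hypothetical chain: the first slug was renamed twice over time.
REDIRECTS = {
    "/old-post/": "/blog/old-post/",
    "/blog/old-post/": "/content-marketing/roi/",
}

flat = flatten_redirects(REDIRECTS)
```

After flattening, both old slugs redirect to the final URL in a single hop, so neither visitors nor crawlers have to follow two redirects to get there.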

Structuring your URLs in a way that caters to search engines is relatively easy, especially if your website is built in a content management system (CMS) like WordPress.

WordPress and other major platforms have special settings that keep your URLs optimized automatically.

For example, in WordPress, under Settings > Permalinks, you can have each post’s title used automatically in its URL.

Otherwise, your URL might come out as a series of random numbers and letters.
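If your platform doesn’t do this for you, turning a title into a clean slug is not hard. The Python sketch below shows one simple approach; it is not WordPress’s own algorithm, and the `slugify` name and the sample titles are just for illustration.

```python
import re
import unicodedata


def slugify(title):
    """Convert a page title into a lowercase, hyphen-separated URL slug."""
    # Strip accents by decomposing characters and dropping non-ASCII marks.
    ascii_text = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Replace every run of non-alphanumeric characters with one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower())
    return slug.strip("-")


services_slug = slugify("Marketing Services")
roi_slug = slugify("  Content Marketing: ROI!  ")
```

The first title becomes `marketing-services` and the second becomes `content-marketing-roi`: short, readable, and descriptive of the page.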

Site Speed

Site speed is another important factor that affects your rank.

The faster a site loads, the faster the user can acquire information and navigate to other pages within the site.

There are many ways to improve the speed of your site, including building your site in an acceptable modern format, reducing the size of your images, and adjusting your caching settings.

Overall Usability

The bottom line for your site navigation is this: your users need to be able to easily and quickly find whatever it is they are looking for.

Optimizing your pages for this will help you improve in search engine rankings, because Google’s primary objective is to improve the overall web experience of its users.

I mentioned a lot of backend structural changes that are necessary for SEO, but the aesthetics are just as important for user experience.

Organize your information as logically as possible by breaking things out into intuitive categories and sub-categories.

Make sure your navigation bar is prominent and easy to use. Make it easy for users to find what they’re looking for—whether that means adding a search bar or helping to point users in the right direction with an interactive feature.

Navigation menu best practices

- Avoid fancy effects. You’ve probably seen navigation menus that fly out fast or create trails when you hover over the main menu item. This looks cool, but can be a huge distraction to visitors. On some devices, special effects can make the navigation menu unusable.

- Keep labels simple. Avoid getting too creative with your navigation labels. Use words and phrases most people will understand. Labels like “contact,” “about,” “services,” and “products” are perfect. A little creativity won’t hurt, as long as you keep it simple and understandable.

- Use easy-to-read color schemes. You can never go wrong with black text on a white background, or varying shades of light gray. If you’re going to use colors, have a professional designer create a color scheme for you.

Whatever you do, your first goal should be making your users happy.

If your users are happy navigating your site, they’re going to stick around.

You’ll have lower bounce rates, which may act as a positive signal to Google, and happy visitors will be more likely to tell their friends about the site.

After all, getting more interested buyers to your site is the most important goal for your bottom line, and improving your site navigation can do that.

Tim holds expertise in building and scaling sales operations, helping companies increase revenue efficiency and drive growth from websites and sales teams.

When he's not working, Tim enjoys playing a few rounds of disc golf, running, and spending time with his wife and family on the beach...preferably in Hawaii.

Over the years he's written for Forbes, Entrepreneur, Marketing Land, Search Engine Journal, ReadWrite and other highly respected online publications. Connect with Tim on LinkedIn & Twitter.
