Human beings aren’t perfect thinkers. We like to imagine ourselves as logical, straightforward problem solvers, but the reality is that most of the time we’re afflicted by hidden biases and misconceptions that completely skew our interpretations of even the most objective data.

When we look at a set of data, or observe something in its natural environment, we form concrete judgments that then shape our interactions with those items.

And from a practical standpoint, we’re good at it; the human brain has evolved to detect patterns easily as a survival mechanism.

This mechanism is oversensitive and flawed, and it produces many of the biases I’m about to go over.

In our everyday lives, these biases might not have much impact, but in the realm of online marketing and advertising, objective data is crucial.

If these biases are affecting your interpretations of otherwise objective data sets, you could wind up presenting faulty information to your clients, or worse, adjusting your campaign to grow in the wrong direction.


Why Bias Compensation Matters

Fortunately, you don’t have to become a slave to your biases. There’s no way to reprogram your brain and avoid them altogether, but there are strategies you can put into place to make it harder for these biases to affect your work. Think of it as a kind of handicapping or adjustment system; for example, if you know the wind is blowing to the east on the golf course, you might align your shot to the west of where you want the ball to actually go. This doesn’t eliminate the wind as a factor, but it does help you get the results you need with only a minor adjustment. Otherwise, your shot will end up blowing far to the east of where you need it to land.

There’s one big problem with this golf metaphor: in golf, you see where your ball lands, so you know right away when a shot is off target. In digital marketing, if you interpret your metrics incorrectly, you may never find out.

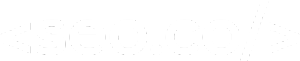

Take a look at this optical illusion as an effective demonstration of how bias can mess with your mind:

(Image Source: Nerdist)

Compare the center square of the side panel to the center square of the top panel. Most people will argue that the side square appears to be a bright orange, while the top square appears to be a dull brown. If I stopped writing here, many of you would continue to believe that.

However, the reality is that both squares are exactly the same color. If you cover up the surrounding colored squares, which trick your brain into overcompensating for lighting conditions, you’ll see this to be true. This process is the kind of “bias correction” I’m talking about; without it, you’ll end up misinterpreting your data, but with it, you can come to a more accurate conclusion.

Types of Biases

There are two types of biases I’m going to cover in this guide, though the second isn’t technically a “bias” in the formal definition. Both can have a dramatic effect on how you see and interpret data, so you’ll need to account for both whenever you measure or report metrics for a given campaign.

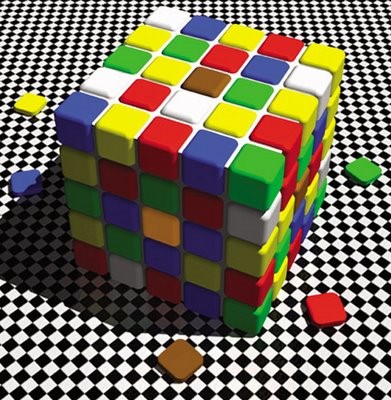

- Cognitive Biases. The first type is classic cognitive biases. These are inherent in the vast majority of the population, though they exert differing degrees of influence depending on the individual and the scenario. Think of these as situations that exploit natural, otherwise valuable processes in the brain; in the color example above, it’s a good thing that our brains can naturally account for light and shadow, but that same ability skews our perception of the squares. The same applies to the following example: which line is shorter?

(Image Source: Brain Bashers)

There are countless sub-types of biases, and I’ll be exploring some of the most relevant for modern marketers.

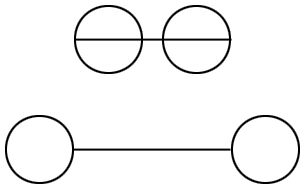

- Misconceptions and Misinterpretations. I also count as a kind of bias any misunderstanding that leads us to misinterpret the true “meaning” of a metric. Thanks to modern technology, we have access to far more data than we would have thought possible just a decade ago. That doesn’t mean that every data point has a clear definition, or that it’s easy to understand. Many metrics share similar names but offer very different views of your campaign. Similarly, one miscommunication between team members can lead to very different interpretations of the same idea:

(Image Source: Connexin)

Let’s start by taking a look at some of the most common cognitive biases that can affect your interpretation of metrics.

Cognitive Biases

This list is not comprehensive; there are a startling number of cognitive biases that can affect your reasoning, social behavior, and even your memory. However, I’ve captured the majority of biases that can affect how your mind finds, dissects, and interprets marketing results. In each subsection, I’ll describe the bias and detail strategies you can use to compensate for it.

Confirmation Bias

First up is confirmation bias, one of the most commonly recognized cognitive biases. It describes the tendency, once a person has settled on a specific belief, to seek out and favor information that “confirms” that belief while avoiding or discounting information that contradicts it. For example, take the strange dress that became a sensation on social media a while back:

(Image Source: LinkedIn)

The center picture is the original; the two on either side show the dress under different lighting and filters. The middle picture drew responses describing it as either gold and white or blue and black. Viewers who encountered one description often saw the dress in those colors, not realizing that the visual information in the photo was ambiguous.

In the context of a marketing campaign, this can happen when you’ve pre-formed a conclusion about one of your strategies. For example, you might assume that your new content strategy is doing well because you’ve invested a lot of time and money into it. You might then only look at data points that confirm this assumption; let’s say you’re getting a lot more comments and sparking new conversations. But you might ignore or overlook contradictory data points, such as lower organic traffic numbers.

To compensate for this, select which metrics you’ll measure to determine success before you even flesh out a strategy. Then, remain consistent with this set of metrics and remain as objective as possible in their analysis—even if the numbers contradict your instincts.
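One way to put this into practice is to write the success criteria down as data before launch and then score every one of them, flattering or not. The sketch below is an illustration under assumptions: the metric names, the relative-change thresholds, and the before/after figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """A success metric committed to before the strategy launches."""
    metric: str
    minimum_change: float  # required relative change, e.g. 0.10 means +10%


# Hypothetical criteria for a new content strategy, fixed in advance.
CRITERIA = (
    Criterion("organic_sessions", 0.10),
    Criterion("comments", 0.20),
    Criterion("conversions", 0.05),
)


def evaluate(before, after, criteria=CRITERIA):
    """Score every pre-registered metric, whether it passes or fails.

    Indexing by c.metric raises KeyError if a metric is missing from
    either period, so an unflattering number can't be silently left out.
    """
    report = []
    for c in criteria:
        change = (after[c.metric] - before[c.metric]) / before[c.metric]
        report.append((c.metric, round(change, 4), change >= c.minimum_change))
    return report


before = {"organic_sessions": 5000, "comments": 40, "conversions": 100}
after = {"organic_sessions": 4500, "comments": 80, "conversions": 102}
for metric, change, passed in evaluate(before, after):
    print(f"{metric}: {change:+.1%} {'PASS' if passed else 'FAIL'}")
```

With these made-up numbers, the comments goal passes while organic sessions and conversions fail, which is exactly the contradictory data that confirmation bias tempts you to skip.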

Selection Bias

Selection bias is usually associated with surveys, which depend on an ample, random sample of participants in order to be considered unbiased and effective. It arises when an improper sampling procedure produces a pool of participants that slants the results in one direction or another. For example, if you only interview people in Idaho for a national-level survey, your answers will disproportionately reflect the views of Idaho residents.

If you’re conducting surveys for your marketing campaign (such as gathering data about your audience’s content preferences), the possible effects here are obvious—if you select a narrow or skewed pool of participants, your data will be inherently unreliable. But this also applies to data you might pull in Google Analytics.

For example, if you’re poking around in different sections, you might find that your “general” traffic visits an average of three internal pages before leaving. From this, you could conclude that your site is effective at enticing people further in, but what about your social traffic alone? If your social visitors often bounce after the first page, it could be an indication that your blog posts (or other social links) aren’t as effective at piquing that curiosity.
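To see how an overall average can hide a weak segment, here’s a minimal Python sketch that computes pages per session for all traffic and for each channel. The (channel, pages viewed) input format and the sample numbers are hypothetical; in practice you’d pull these figures from your analytics segments.

```python
from collections import defaultdict


def pages_per_session(sessions):
    """Average pages per session overall and for each traffic channel.

    `sessions` is a list of (channel, pages_viewed) pairs, a hypothetical
    export format rather than any specific Google Analytics report.
    Raises ZeroDivisionError on an empty list.
    """
    pages = defaultdict(int)
    counts = defaultdict(int)
    for channel, viewed in sessions:
        pages[channel] += viewed
        counts[channel] += 1
    overall = sum(pages.values()) / sum(counts.values())
    by_channel = {ch: pages[ch] / counts[ch] for ch in counts}
    return overall, by_channel


# Made-up sample: organic visitors browse deeply, social visitors bounce.
sample = [("organic", 4)] * 6 + [("social", 1)] * 3 + [("direct", 3)]
overall, by_channel = pages_per_session(sample)
print(f"all traffic: {overall:.1f} pages/session")
for channel, avg in by_channel.items():
    print(f"{channel}: {avg:.1f} pages/session")
```

In this sample the overall average is three pages per session, yet social sessions average only one page.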

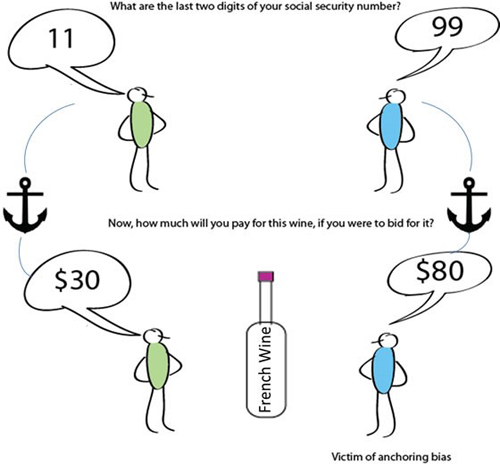

Anchoring

The anchoring effect has everything to do with what you encounter before encountering a certain event (or in this case, a certain metric). Because our minds are wired for comparisons, whenever we hear a numerical value, we instantly compare future numerical values to it—even if those numbers are completely unrelated.

Take a look at the following cartoon as an example:

(Image Source: Wealth Informatics)

Both participants are essentially generating random numbers—the last digits of their SSNs. When asked what they’d estimate for an identical bottle of wine, the person with the higher number will generally estimate it to be a higher value.

This can happen in your metrics reporting, too. For example, let’s say you recently read an article that boasted a 300 percent improvement in ROI after making a simple change to a marketing campaign. If you notice a 30 percent growth rate in your own traffic, you might think it’s pretty low. Conversely, if you hear someone complain about a terribly low conversion rate—like a fraction of a percent—that 30 percent growth figure might start looking pretty good.
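One way to blunt an anchor is to judge a number against your own history instead of against whatever figure you happened to see last. The sketch below, a simple heuristic rather than a formal significance test, expresses this month’s growth as a z-score against your past monthly growth rates; the history values are hypothetical.

```python
from statistics import mean, stdev


def growth_vs_own_baseline(past_growth, current_growth):
    """Express this period's growth as a z-score against your own history.

    The yardstick is your campaign's past growth rates, not a headline
    number you happened to read. Needs at least two past periods that
    are not all identical (otherwise the spread is zero).
    """
    baseline = mean(past_growth)
    spread = stdev(past_growth)
    return (current_growth - baseline) / spread


# Hypothetical monthly traffic growth rates (0.05 means +5%).
history = [0.05, 0.08, 0.03, 0.06, 0.04]
z = growth_vs_own_baseline(history, 0.30)
print(f"30% growth is {z:.1f} standard deviations above your usual month")
```

Against this made-up history, the 30 percent month is far outside the normal range, however modest it looks next to someone else’s 300 percent headline.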

Irrational Escalation

Irrational escalation, sometimes known as escalation of commitment, is a bias that has less to do with how you report or interpret metrics and more to do with what you do with your conclusions. Under this bias, people become more likely to keep investing in a course of action, often more heavily, simply because they’ve already invested in it.

The typical example is the “dollar auction” game, in which a one-dollar bill is auctioned off to a group. Anybody can bid any amount they want for the dollar. At the end of the game, the winner gets the one-dollar bill for whatever amount they bid, but there’s one twist: the second-place finisher must pay his/her final bid to the auctioneer without getting the dollar in return. Bids typically escalate far beyond the value of the bill, because once you’re committed to a certain idea or strategy, it’s easy to incrementally invest just “a little bit more,” even after doing so has become irrational.

What’s the practical takeaway here? Let’s say you’ve invested in a certain marketing strategy for many months now, and you’ve seen decent results, but the past few months have been slow to the point that you’re barely breaking even on it. The irrational escalation bias would have you continue investing in it, since you’ve already come this far, even if there is no proof of future benefits. The best defense against this bias is to weigh the pros and cons of each strategy, even the ones you’re used to, using objective, preferably numerical evidence.
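A minimal sketch of that evidence-based check, assuming a hypothetical three-month lookback window and a break-even hurdle: it decides whether to continue using only recent return on investment, so money already spent doesn’t factor into the choice. All figures are made up.

```python
def recent_roi(revenue, cost, months=3):
    """Return on investment over only the most recent `months` periods."""
    rev = sum(revenue[-months:])
    spent = sum(cost[-months:])
    return (rev - spent) / spent


def keep_investing(revenue, cost, months=3, hurdle=0.0):
    """Decide on recent performance alone; sunk cost plays no part."""
    return recent_roi(revenue, cost, months) > hurdle


# Hypothetical strategy: strong early months, now barely breaking even.
revenue = [5000, 6000, 6500, 3100, 3000, 2900]
cost = [3000] * 6
lifetime = (sum(revenue) - sum(cost)) / sum(cost)
print(f"lifetime ROI: {lifetime:.0%}")  # the number that invites escalation
print(f"last 3 months ROI: {recent_roi(revenue, cost):.0%}")
print("keep investing?", keep_investing(revenue, cost))
```

Here the lifetime ROI still looks healthy at about 47 percent, while the last three months break exactly even, so the recent-only check says to stop.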

The Overconfidence Effect

All of us are desperately and irredeemably overconfident. I’m not talking about your self-esteem or your comfort level in various social situations; I’m talking about your tendency to overestimate the accuracy of your own perceptions and judgments. Most people believe they are better than average at making decisions and answering questions, in almost any scenario.

Because of this, marketers often believe they know more about data analysis than they actually do, and believe themselves to be better decision makers than they actually are. What happens is this: a marketer will look at the data, form a conclusion about it, and then stick with that conclusion without exploring any other possibilities. In general, there are too many unknowns for any one definitive conclusion to hold.

To compensate for this, bring more minds into your analysis and discussion. Each person will be overconfident about his/her own analytical ability, but together, you’ll be able to make up for each other’s weaknesses and come to a more balanced conclusion.

Essentialism

Essentialism is a complex cognitive bias that permeates our lives in profound, and sometimes horrible, ways. Its name derives from the root word “essence” because it reflects a natural human tendency to reduce complex topics and ideas to their barest essence. This tendency is useful during the early stages of learning and development, when abstraction is difficult and acquisition is imperative, but later in life it gives us the nasty habit of categorizing things, places, and people based on what we know about other things, places, and people. It’s at least partially responsible for stereotypes and prejudices.

Far less seriously, essentialism also causes marketers to over-generalize or categorize certain types of metrics. For example, they might believe that bounce rate is inherently “bad” and should therefore always be lower, even though people bouncing might be a good thing if they aren’t part of your target demographics to begin with.

There’s no easy way to stop your mind from wandering in this direction, but you can strive for neutrality by treating every metric as having both positive and negative traits; see each metric for what it is without trying to reduce it to a universally “good” or “bad” position. This is especially important for metrics relating to user behavior, which is qualitative and, at times, unpredictable.

Optimism Bias

(Image Source: Masmi)

I think we all know what optimism bias is like. We’ve all felt it in one application or another, and most of us still experience it throughout our daily lives. No, this has nothing to do with whether you consider yourself an “optimist” or “pessimist” in general—instead, it’s a well-documented psychological phenomenon that applies to most people.

The biggest effect here is that people inherently believe they are less likely than average to experience bad events, especially rare ones. Most people never think they’ll be robbed, or that their house will catch fire, or that they’ll lose their job. But these things still happen.

In the marketing world, this usually shows up around PR disasters and penalties. Most brands never consider that their social media statistics might be tanking because of a foolish comment they made some time earlier, or that a drop in organic traffic might be the result of a serious penalty. The fact is, these things happen, even to smart, well-planned brands and strategies. Don’t count yourself out of the possibility here.

Group Attribution Errors

The group attribution error occurs when you see the behavior of a single person and immediately project that person’s traits onto the entire group. For example, at a bar you might see a group of people at a nearby table, one of whom is particularly obnoxious, yelling and screaming. Many people would then assume that the entire group is obnoxious, rather than just the one individual.

This error can also creep into reporting, depending on how wide your measurements are and whether you rely on anecdotal evidence. For example, let’s say you wrote a knockout piece of content and a handful of users took to commenting actively on it. Generally, comments are a good sign that your piece was interesting or valuable enough for your readers to engage with, but can you make this assumption for your entire audience, or was it just a handful of weirdos you happened to snag?

This isn’t to say that small population samples are inherently useless—they can be valuable, and they can represent the whole. What’s important to remember is that they don’t always represent the whole, and you need to compensate for this by looking at bigger samples.

(Image Source: The Rad Group)
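To put a number on how little a handful of commenters can tell you, you can attach a confidence interval to a rate. The sketch below uses the Wilson score interval, one standard choice for proportions (my pick for illustration, not something this guide prescribes), and compares the same hypothetical 30 percent comment rate measured on 10 readers and on 1,000 readers.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a proportion (z=1.96 gives roughly 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return center - half_width, center + half_width


# Same 30% comment rate, measured on 10 readers versus 1,000 readers.
for commenters, readers in [(3, 10), (300, 1000)]:
    low, high = wilson_interval(commenters, readers)
    print(f"{commenters}/{readers}: plausible range {low:.1%} to {high:.1%}")
```

The ten-reader interval spans roughly fifty percentage points, while the thousand-reader interval is only a few points wide, so only the larger sample says much about your whole audience.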

The bottom line for most of these biases is that you shouldn’t take anything at face value or trust your instincts too much. Most of your instincts are based on evolutionarily advantageous cognitive functions, which means that when it comes to the logic and math of statistical analysis, our minds can’t be trusted. Treat everything with a secondary degree of scrutiny.

Misconceptions and Misinterpretations

As if all those cognitive biases weren’t enough, there are cases where we don’t even define our metrics accurately. Forget confirmation bias—if you’re looking at one metric thinking it’s another, your numbers are wrong anyway. This section is designed to clear up some of the most common points of confusion for web traffic and social media metrics, but make no mistake—this is far from comprehensive. You owe it to yourself to double check your interpretation of every metric you measure; even one differing word can compromise an entire construct.

Google Analytics

Google Analytics is free, easy to navigate, and reliable, but that doesn’t mean it’s always straightforward. Take a look at some of the discrepancies you might find here.

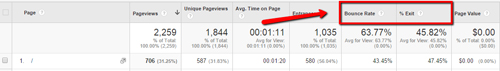

- Bounce rate and exit rate. Conceptually, bounce rate and exit rate sound identical. They’re even right next to each other on Analytics’s default dashboard, but as you can see below, they can be very different. For a given page, exit rate is the percentage of all page views of that page that were the last in their session. Bounce rate is the percentage of sessions that started on that page and ended there, without the user viewing any other page.

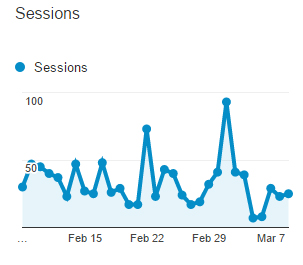

- Visitors, visits, and page views. What’s the difference between a visit and a page view? Can you tell me without basically repeating yourself? As it turns out, a “visit” begins when a user arrives at your website and, by default, ends once that user has been inactive for 30 minutes or more. A page view, on the other hand, is counted whenever a person loads, or reloads, a page on your site. Therefore, it’s possible for one experience to count as one visit and multiple page views. Visits are also referred to as “sessions,” and visitors (“users”) are the distinct people, or more precisely the distinct browsers and devices, behind those visits.

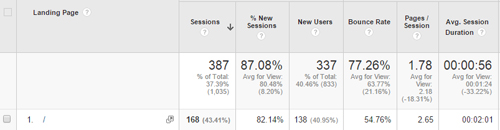

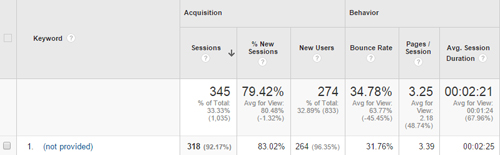

- Segmentation. It’s also easy to misinterpret defined metrics when you’ve segmented your traffic improperly (or haven’t segmented it at all). Sometimes, you’ll want to look at a “general audience,” and other times you’ll want to drill down to something more specific, like users who found you through search or through social media—but it’s important to know the difference. Take a look at how different Direct and Organic traffic results can be:

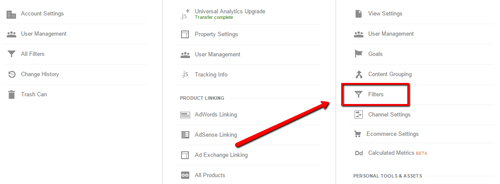

- Internal traffic. You may also be skewing your numbers by allowing internal traffic to be reported in Google Analytics. Technically, you haven’t misinterpreted the meaning of a metric here, but you might be severely overestimating how many people are actually coming to your site. Fortunately, it’s easy to set up a filter that will keep you from tracking all your coworkers and partners who access the site on a daily basis (but aren’t a part of your target demographics).

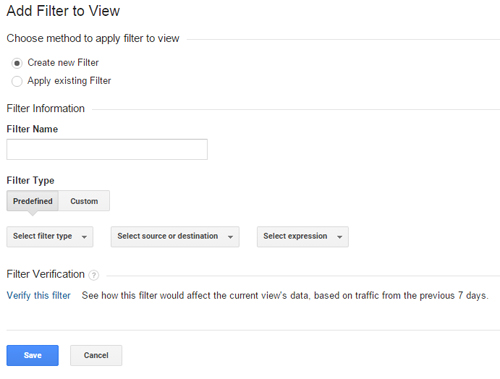

To start, head to the admin tab and select “Filters.”

This will give you the opportunity to “create” a new filter; there are several filter types to choose from, but usually you’ll want to go for one that filters users based on IP address or ISP information. This will keep Analytics from tracking information from any of the users you specify.

- The numbers game. Finally, remember that numbers are just numbers. Your bounce rate might be high, but that doesn’t mean everyone who left was uninterested in your page. Your click-through rates from social might be good, but that doesn’t mean people liked your content. It’s tempting to reduce everything to objective metrics, but it’s important to recognize those metrics for what they are.
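The Google Analytics definitions in this section (visits, page views, exit rate, bounce rate, and internal-traffic filtering) can be tied together in one small sketch. It is not how Google Analytics computes anything internally; it assumes a hypothetical raw log of (visitor ID, IP address, timestamp, page) hits, the simplified 30-minute inactivity rule described above, and made-up internal IP addresses from a documentation range.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Simplified session rule from the text: 30 minutes of inactivity ends a visit.
# (Real Google Analytics applies further rules; this is only an illustration.)
SESSION_TIMEOUT = timedelta(minutes=30)

# Hypothetical office IP addresses whose traffic should not be counted.
INTERNAL_IPS = {"203.0.113.10", "203.0.113.11"}


def sessionize(hits):
    """Group (visitor_id, ip, timestamp, page) hits into sessions (lists of pages)."""
    by_visitor = defaultdict(list)
    for visitor, ip, ts, page in hits:
        if ip in INTERNAL_IPS:  # the "internal traffic" filter
            continue
        by_visitor[visitor].append((ts, page))

    sessions = []
    for events in by_visitor.values():
        events.sort()  # chronological order for this visitor
        current, last_ts = [], None
        for ts, page in events:
            if last_ts is not None and ts - last_ts >= SESSION_TIMEOUT:
                sessions.append(current)  # inactivity gap closes the visit
                current = []
            current.append(page)
            last_ts = ts
        if current:
            sessions.append(current)
    return sessions


def page_rates(sessions, page):
    """Page views, exit rate, and bounce rate for one page."""
    views = sum(s.count(page) for s in sessions)
    exits = sum(1 for s in sessions if s[-1] == page)
    entrances = [s for s in sessions if s[0] == page]
    bounces = sum(1 for s in entrances if len(s) == 1)
    return {
        "page_views": views,
        "exit_rate": exits / views if views else 0.0,
        "bounce_rate": bounces / len(entrances) if entrances else 0.0,
    }


t0 = datetime(2024, 1, 1, 9, 0)
hits = [
    ("a", "198.51.100.1", t0, "/home"),
    ("a", "198.51.100.1", t0 + timedelta(minutes=5), "/blog"),
    ("a", "198.51.100.1", t0 + timedelta(minutes=50), "/blog"),  # 45-minute gap
    ("b", "198.51.100.2", t0, "/blog"),
    ("b", "198.51.100.2", t0 + timedelta(minutes=10), "/pricing"),
    ("c", "203.0.113.10", t0, "/home"),  # a coworker, filtered out
]
sessions = sessionize(hits)
print("visitors:", len({h[0] for h in hits if h[1] not in INTERNAL_IPS}))
print("visits:", len(sessions), "page views:", sum(len(s) for s in sessions))
print(page_rates(sessions, "/blog"))
```

With this toy log, the coworker’s hit disappears, visitor “a” produces two visits because of the 45-minute gap, and the /blog page ends up with an exit rate of about 67 percent but a bounce rate of 50 percent: the same page, two very different numbers.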

Social Media

Next, there are a few social media metrics that require exploration.

- Likes (or follows). No matter what platform you’re on, there’s some metric that tells you how many people are interested in your brand. Most brands thrive on this figure, either bragging about how many followers they have or complaining that they need more “likes.” However, don’t let this metric deceive you; it doesn’t accurately reflect how people actually feel toward your brand, which is far more important in the long run.

- Page Insights. Most social platforms offer page insights, or something similar, which will tell you how many people have seen or clicked through your material. Be careful here; an “impression” doesn’t always mean a person actually saw your post, only that it appeared in their newsfeed. A vague “click” could mean any kind of interaction, even reporting the post. Dig into what these deceptively ambiguous metrics actually mean before drawing any important conclusions from them, and remember that every social platform is a bit different, which is why I don’t dig into any specific platform’s metrics here.

(Image Source: Facebook)

- Engagements. Finally, engagements, such as post likes, shares, and comments, are all important and valuable, but don’t try to reduce them to a purely quantitative value. For example, an article that earns 1,000 shares can be considered popular, but that number doesn’t reflect how strong an impression it made on the people who shared it; they could have shared it just because of the clever title. Similarly, don’t take comments as a sure indication that these people are fans of your brand, and don’t assume that every “like” means that someone read and enjoyed your piece. Take these engagement metrics with a grain of salt.

Comparison Errors

As a general rule, the way you compare metrics to one another holds a lot of power over the conclusions you’ll eventually reach. In particular, it’s critically important to take “apples to apples” measurements. If you’re going to evaluate your progress in a certain area, you need to replicate your measurement conditions as precisely as possible. For example, if you’re looking at the bounce rate for organic visitors over the course of a month, you can’t compare that to the bounce rate of social visitors over the course of a different month; that’s comparing apples to oranges. Allow only one variable to differ between your compared metrics, such as the month in question or the type of traffic; when you introduce two, the comparison crumbles.
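The one-variable rule can be made mechanical by recording the conditions behind each number and checking how many of them differ. This is a hypothetical sketch; the three fields (metric, channel, period) are an assumption, and you’d extend them with whatever else your reports vary on, such as device or landing page.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class MetricSlice:
    """One measured number and the conditions it was measured under."""
    metric: str   # e.g. "bounce_rate"
    channel: str  # traffic type, e.g. "organic" or "social"
    period: str   # e.g. "2024-03"


def differing_conditions(a, b):
    """Names of the fields on which two slices differ."""
    return [f.name for f in fields(MetricSlice) if getattr(a, f.name) != getattr(b, f.name)]


def is_apples_to_apples(a, b):
    """Same metric, with exactly one measurement condition allowed to vary."""
    diffs = differing_conditions(a, b)
    return len(diffs) == 1 and diffs != ["metric"]


march_organic = MetricSlice("bounce_rate", "organic", "2024-03")
april_organic = MetricSlice("bounce_rate", "organic", "2024-04")
april_social = MetricSlice("bounce_rate", "social", "2024-04")
print(is_apples_to_apples(march_organic, april_organic))  # True
print(is_apples_to_apples(march_organic, april_social))   # False
print(differing_conditions(march_organic, april_social))  # ['channel', 'period']
```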

Communication

Recognize that your communicative ability has a strong bearing on how others interpret metrics. One wrong or misleading word about how a specific metric should be read could compromise a person’s interpretation of that metric for the foreseeable future. This is especially important with clients; you want them to have the clearest, most objective view possible, so remain diligent and consistent from the beginning to give them the full and accurate picture of your marketing metrics.

Utility and Value

There are two important takeaways regarding the utility and value of measurement and analysis that I need to address. So far, my guide may have you believing that measurements are inherently inaccurate, or that they aren’t worth pursuing, but this is far from the case. Measurement and analysis are crucial if you want your business to stay alive. What truly matters is how you approach them:

First, your measurements are only worthwhile if they’re objective. And to make things worse, it’s incredibly hard to be objective (as you’ve seen in my list of cognitive biases). If you allow your instincts or your preconceived notions to take over, then your metrics become like a mirror—you only see what you want to see. Data should be a tool for you to answer important questions, not a means of self-affirmation.

Second, don’t base everything on numbers. The numbers themselves are objective, but thanks to modern technology, there are too many of them. Data can be manipulated to tell you almost anything, and thanks to human imperfection, it’s practically impossible to ever come up with a completely unbiased, objective conclusion about anything. What’s important here is maintaining a healthy degree of confidence; feel free to use your metrics and numbers to form conclusions, but always keep a shade of doubt in the back of your mind. Analytics aren’t perfect; accept that.

Final Takeaways

Though my hope was to create a detailed and valuable guide, I know this is inherently not a comprehensive one. To create a truly comprehensive guide on human bias and the tendency for errors in marketing would require far more resources than I have and, quite possibly, more knowledge about the human mind than we currently hold.

If there’s one bottom-line takeaway from this guide, it’s this: no matter how reliable your data is, it still requires a human mind for interpretation, and human minds are fallible. You can reduce this fallibility (as you should), but you can’t eliminate it, so instead expect it, compensate for it, and don’t let it compromise your SEO link building or PPC campaigns.

Tim holds expertise in building and scaling sales operations, helping companies increase revenue efficiency and drive growth from websites and sales teams.

When he's not working, Tim enjoys playing a few rounds of disc golf, running, and spending time with his wife and family on the beach...preferably in Hawaii.

Over the years he's written for publications like Forbes, Entrepreneur, Marketing Land, Search Engine Journal, ReadWrite and other highly respected online publications. Connect with Tim on LinkedIn & Twitter.
